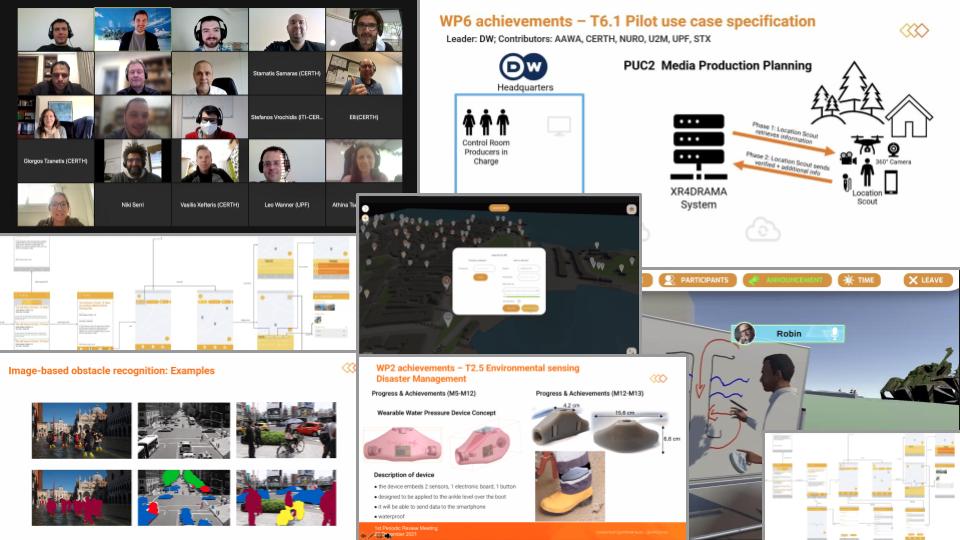

Wow. We’ve already completed more than 50% of XR4DRAMA, and the week before Christmas 2021 saw us pass our first official review. So it’s a good time to give you a quick summary of all the tech we’ve been putting together and reflecting on – and to let you know what’s coming next.

Over the course of XR4DRAMA’s first 12+ months, the consortium has achieved the following in terms of technical research and engineering:

Backend tools/modules

- development of automatic web data collection tools able to retrieve various, heterogeneous online resources, e.g. social media posts, web videos, or weather forecasts

- recording of drone footage (thousands of images and videos for the media production use case captured on Corfu, Greece)

- establishment of a satellite service able to exploit satellite data from Sentinel Hub (true color, all bands, DEM*) for specific areas and time spans; the data is turned into a rough 3D terrain model which can be used in the VR or geo service applications

- completion of a feasibility study focused on the wearable/portable environmental sensors needed in the use case scenarios

- creation of a new smart textile design that aims to integrate existing physiological sensing system solutions (based on user requirements); the garment can be worn under civil protection uniforms

- training of a machine learning model able to detect the stress levels of the users wearing the physiological sensing system

- development of a stress-level detection module based on audio signals

- development of visual analysis modules (initial versions) that support the following functionalities:

  - shot detection

  - scene recognition

  - emergency classification

  - photorealistic style transfer

  - building and object localization

- integration of an ASR module into the XR4DRAMA platform

- development of an NLG module that turns data into English and Italian text

- implementation of a preliminary text analysis pipeline for English and Italian

- development of an initial XR4DRAMA ontology that matches available information to semantic entities (e.g. “sensor and audio data for first responder Maria indicate a high stress level, text analysis based on social media input detects emergency situation close to Maria’s location” -> “We need to pull her out, she’s in danger!”); semantic reasoning is applied on top of the knowledge base in order to elaborate on:

  - geographical data (whereabouts of citizens, safe areas)

  - temporal data (what happened when?)

  - risk reports (to update/warn users)

- finalization of a (constantly running) 3D reconstruction service that is able to:

  - receive multiple requests (images, videos)

  - generate a 3D model

  - simplify the produced mesh

  - provide the result to XR4DRAMA users

- completion of the geo service modules (initial version, incl. geoserver and GIS); data from OSM, opengov.gr and AAWA are aggregated and organized via categories and subcategories that address the user requirements; existing data can be updated/extended (also via user frontend tools)
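
To make the ontology example from the list above a bit more tangible, here is a minimal Python sketch of that kind of rule: high stress from both sensor and audio data, plus an emergency detected close to the responder, leads to a "she's in danger" conclusion. This is only an illustration and not the XR4DRAMA implementation; the class names, the 0 to 1 stress scale, the 0.7 threshold, and the 1 km radius are all assumptions made for the example.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt


@dataclass
class Responder:
    name: str
    lat: float
    lon: float
    sensor_stress: float  # hypothetical 0..1 score from the physiological sensors
    audio_stress: float   # hypothetical 0..1 score from the audio analysis


@dataclass
class Emergency:
    kind: str
    lat: float
    lon: float


def distance_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points (haversine formula)."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(a))


def in_danger(responder, emergencies, stress_threshold=0.7, radius_km=1.0):
    """Rule: both stress signals are high AND an emergency is nearby."""
    high_stress = min(responder.sensor_stress, responder.audio_stress) >= stress_threshold
    nearby = any(
        distance_km(responder.lat, responder.lon, e.lat, e.lon) <= radius_km
        for e in emergencies
    )
    return high_stress and nearby


maria = Responder("Maria", 39.62, 19.92, sensor_stress=0.9, audio_stress=0.8)
flood = Emergency("flood", 39.621, 19.921)
if in_danger(maria, [flood]):
    print(f"We need to pull {maria.name} out!")
```

In a real knowledge base, a rule like this would be expressed over ontology entities rather than hard-coded Python, but the logic (combine several independent signals, then check spatial proximity) is the same idea.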

User/frontend tools

- development of an AR app (1st version)

  - improves situation awareness of first responders, location scouts, and other users in the field by providing relevant platform data (e.g. geospatial information)

  - allows for bilateral communication: both users in the field and control room staff can send updates

  - offers the following first set of user features: add/edit POIs, upload multimedia files, add comments, manage project, manage user profile

  - offers 2D maps and an initial AR view (e.g. display of POIs, POI info, and navigation on top of real world view)

  - exploits mobile phone sensors (GNSS, IMU, compass) to help users in the field navigate and make sure control room staff can locate them

- development of a VR authoring tool (desktop app) as the core of XR4DRAMA; it allows users to:

  - create projects

  - create various virtual environments

  - add points of interest (which can be viewed globally, i.e. with all XR4DRAMA tools)

  - add other users

- development of a collaborative VR tool that connects to the VR authoring tool; it allows users to:

  - ingest OSM maps

  - interact/work with virtual props (provided by the app)

  - experiment with various virtual cameras/drones (media production use case)

- development of a mobile application for the public; it allows users to:

  - create/submit text reports

  - create/submit audio reports

  - automatically submit their geolocation when they send a report

  - get updates regarding the general situation (SA notification system)
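
For readers wondering what "automatically submit their geolocation" could look like in practice, here is a small Python sketch of a citizen report that always carries the sender's position and a timestamp. It is a hypothetical data structure for illustration only: the field names, the `build_report` helper, and the JSON layout are assumptions and do not describe the actual app's API.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class CitizenReport:
    kind: str      # "text" or "audio"
    content: str   # message text, or a reference to an uploaded audio file
    lat: float     # attached automatically, never typed in by the user
    lon: float
    sent_at: str   # ISO 8601 timestamp in UTC


def build_report(kind, content, current_position):
    """Create a report and attach the device position without user input."""
    if kind not in ("text", "audio"):
        raise ValueError(f"unsupported report kind: {kind}")
    lat, lon = current_position
    return CitizenReport(kind, content, lat, lon,
                         datetime.now(timezone.utc).isoformat())


def to_json(report):
    """Serialize the report for submission to a (hypothetical) backend."""
    return json.dumps(asdict(report))


report = build_report("text", "Road is flooded near the bridge", (45.55, 11.54))
print(to_json(report))
```

Bundling position and time into every report is what would let the backend place citizen input on the map and feed it into the geographical and temporal reasoning described earlier.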

Outlook on 2022

With all this under our belt, we can now put a focus on system integration, fine-tuning, and – of course – a lot of user testing. Laptops, headsets, and smart devices have already been procured and set up to thoroughly check the prototype applications. We’ll be back with more info on this soon.

In the meantime, make sure to follow us on Twitter and/or become part of our user group.

To learn about XR4DRAMA in detail, you can also head over to our resources page.

*all abbreviations used in this and other posts are explained in our Guide to the XR4DRAMA Alphabet Soup.